Conducting log file analysis for enterprise SEO

Log file analysis might sound like an unfamiliar and daunting prospect if you’re not deeply technical. However, it can be a real game changer for elevating the crawlability and visibility of your key commercial pages and beyond.

For enterprise-level sites or large eCommerce sites, keeping an eye on how Googlebot crawls different areas of your site, as recorded in your server log files, can be an eye-opener in ensuring your priority pages are being crawled. Let’s look at how to get the most out of it.

What is log file analysis in the context of SEO?

Log file analysis, or server log analysis, is the process of analysing the records your server keeps of each request to see how the Googlebot user agent (or another search engine's crawler) interacts with different areas of your site, and how frequently it does so. It can be a vital tool for understanding which areas of your site search engines appear to prioritise with crawler visits and, conversely, which crucial areas they may be neglecting.

Search engines use automated crawlers, known as bots, to continually crawl the web to discover and index content. Every request these bots make to your site is recorded in your website's server logs.

Log files are stored on your server (or by your CDN) and should be made available to you by your developer or engineering team. Log locations differ depending on the type of server or CDN you’re using, but this information should be readily available in your provider’s documentation.

Server log files typically include:

The URL path of the resource requested

The user agent (Googlebot or otherwise)

The IP address of the user agent

The timestamp of the request

The status code of the server response

We’ll break down what this looks like further on in this article.

Crunching this data can help SEO teams identify patterns of search engine crawl behaviour, with a view to either deterring it from crawling low-value or invalid resources, or directing it to more important resources such as commercial pages or leading guide content. You might also identify other technical issues that aren’t too apparent, with server log analysis exposing instances of search crawlers interacting with redirecting or broken resources deep within your site.

You can also use Google Search Console to get some insight into how Google is crawling your website and which status codes and resource types it is requesting. Open “Settings” at the bottom of the left-hand menu, then open the crawl stats report.

This report won’t be as comprehensive as a full log file analysis project, but it can be a useful resource nonetheless in terms of getting started.

The importance of log file analysis for enterprise brands

Enterprise brands, or big eCommerce brands with large product inventories and URL resources spanning across many global variants, face a lot of challenges within their SEO programmes. Whether it’s perfecting international SEO across their departments or sourcing the right technology for enterprise SEO, there’s a lot to consider in order to drive organic growth.

Outside of the website, large brands with a global footprint often face challenges with teams operating in silos. Among other things, this can make it harder to maintain a centralised, best-practice SEO programme.

However, given enterprise websites are often templated in structure and design across global variants, log file analysis can be a vital tool to identify universal issues and recommendations that can be rolled out on a global basis.

Back to log file analysis and the role it plays in enterprise SEO. Imagine a global eCommerce brand selling thousands of products spanning different categories, all with regional variants in terms of localised pages. An extreme example of this might be Amazon. Think about how many product URLs, URL variants, and CSS and JavaScript resources search engines must consider when crawling such a mammoth site.

By gathering data over a set period of time, thorough log file analysis can reveal clear trends in how search engines interact with particular sections or URL categories of your site.

For example, you might be experiencing visibility and conversion issues on certain product pages buried deep within your site architecture. If log file analysis shows that bots aren’t crawling these pages as often as they should, you can present this to senior teams as empirical evidence that steps should be taken to improve it. The same applies to any URL or subcategory across your site, whether it’s a new product range or a blog series created to bring in a good share of organic traffic.

On a wider basis, log file analysis can also be used to build a case for site redesigns and a re-thinking of site taxonomies, and how they are set up for localisation and international SEO.

Over time, some large enterprise sites may have followed a growth path that isn’t sustainable for SEO and UX purposes, with additional sections bolted on that don’t follow a logical URL folder structure. This is common, but it may hinder visibility for certain sections of your site if the URL structure of old and new content isn’t logical for search engines and users. Log file analysis, along with other SEO metrics such as keyword rankings and organic traffic, can be a great door opener, providing robust, data-led evidence to address such issues for the benefit of the business.

How to interpret log files and how they can help

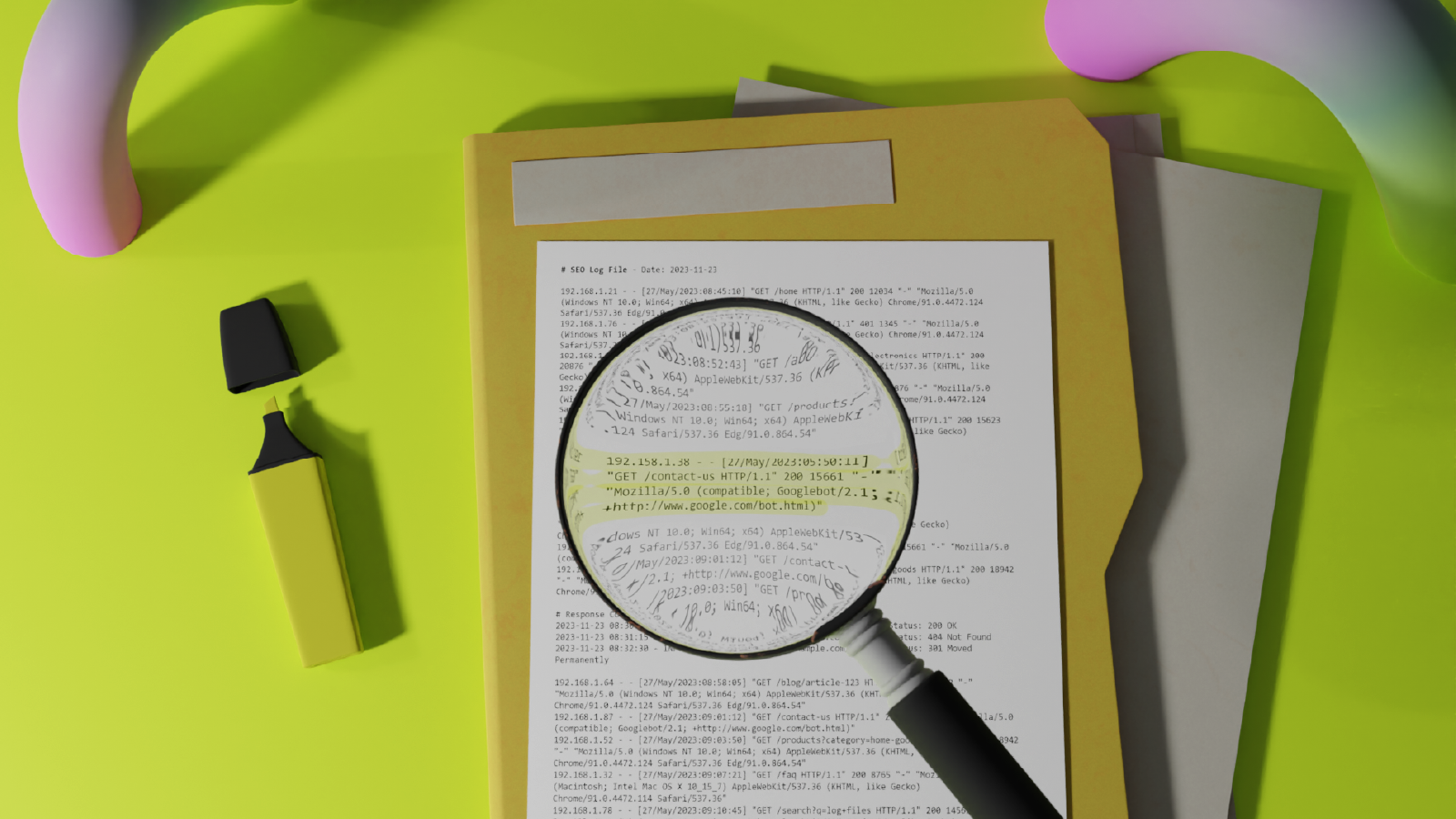

Here’s an example of a typical log file:

192.158.1.38 - - [27/May/2023:05:50:11] "GET /contact-us HTTP/1.1" 200 15661 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

To break this down, we have:

192.158.1.38 – the IP address of the requester

[27/May/2023:05:50:11] – the timestamp of the request

"GET /contact-us HTTP/1.1" – the HTTP method (GET), the resource requested (in this case, the /contact-us page of the site) and the protocol version (HTTP/1.1)

200 – the status code of the resource requested (200 means successful)

15661 – the size of the response in bytes

"-" – the referrer; a hyphen means no referrer was sent

"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" – the requester's user agent, which in this case is Googlebot

The format of a log file may vary. However, you can quickly start to spot trends by exporting lines of data from your server logs over an extended period, say six months, and then filtering them so you’re only viewing requests from search engine bots.
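If you'd rather script this first pass than work in a spreadsheet, here is a minimal Python sketch. It assumes the "combined"-style layout shown in the example earlier (most servers also add a timezone offset inside the brackets, so that part is treated as optional), and the names `LOG_PATTERN`, `BOT_TOKENS`, `parse_log_line`, `is_search_bot` and `is_verified_googlebot` are illustrative. Because user-agent strings are easy to spoof, it also follows the approach Google documents for verifying Googlebot: a reverse DNS lookup on the requesting IP, a check that the hostname ends in googlebot.com or google.com, then a forward lookup that must resolve back to the same IP.

```python
import re
import socket
from datetime import datetime

# Pattern for a "combined"-style access log line like the example above.
# Assumption: most servers add a timezone offset (e.g. "+0000") inside the
# brackets; the example omits it, so it is optional here (and ignored).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ '
    r'\[(?P<time>[^\]\s]+)(?: [+-]\d{4})?\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

# Hypothetical list of user-agent tokens to treat as search engine bots.
BOT_TOKENS = ("Googlebot", "bingbot", "YandexBot", "Baiduspider", "DuckDuckBot")


def parse_log_line(line):
    """Split one log line into its fields, or return None if it doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["time"] = datetime.strptime(fields["time"], "%d/%b/%Y:%H:%M:%S")
    fields["status"] = int(fields["status"])
    fields["size"] = 0 if fields["size"] == "-" else int(fields["size"])
    return fields


def is_search_bot(user_agent):
    """Cheap first pass: does the user agent *claim* to be a known bot?"""
    ua = user_agent.lower()
    return any(token.lower() in ua for token in BOT_TOKENS)


def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Confirm a claimed Googlebot IP with a reverse, then forward, DNS lookup.

    The lookups are parameters so the check can be tested without network access.
    """
    try:
        hostname = reverse_lookup(ip)[0]
        if not hostname.endswith((".googlebot.com", ".google.com")):
            return False
        return ip in forward_lookup(hostname)[2]
    except OSError:  # socket.herror / socket.gaierror: no usable DNS record
        return False
```

In practice you would parse each line, keep those where `is_search_bot` is true, and verify each distinct IP once (caching the result), since the same crawler IPs repeat across many lines.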

Once exported, the data will need to be filtered, sliced and visualised in Excel (or your reporting platform of choice). You’ll soon be able to spot vital insights such as:

URLs and website directories that are being prioritised over others and how this aligns with your business goals

Wasted crawl budget on low-value URLs and resources

Orphan pages, redirects and 404s that are being repeatedly crawled in comparison to pages with a 200 status code

Whether or not any noindex or canonical URL implementations are being respected accordingly

Understanding the correlation between crawl behaviour and how long it takes to deliver organic traffic and results
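Several of the points above come down to counting requests. As a rough sketch, assuming you have already parsed and filtered your logs into (path, status) pairs for verified bot requests, the following tallies requests per top-level directory and per status class; `top_directory` and `summarise_crawl` are hypothetical helpers, and treating single-segment paths as root-level pages is a simplifying assumption.

```python
from collections import Counter


def top_directory(path):
    """Map '/products/shoes?size=9' to '/products/'; root-level pages map to '/'."""
    path = path.split("?", 1)[0]
    segments = [s for s in path.split("/") if s]
    # A single segment without a trailing slash (e.g. '/contact-us') is a root-level page.
    if not segments or (len(segments) == 1 and not path.endswith("/")):
        return "/"
    return f"/{segments[0]}/"


def summarise_crawl(hits):
    """hits: iterable of (path, status) tuples from verified bot requests.

    Returns two Counters: requests per top-level directory, and requests per
    status class ('2xx', '3xx', '4xx', '5xx').
    """
    by_directory = Counter()
    by_status_class = Counter()
    for path, status in hits:
        by_directory[top_directory(path)] += 1
        by_status_class[f"{status // 100}xx"] += 1
    return by_directory, by_status_class
```

Calling `most_common()` on the first Counter gives a quick ranking of the directories bots favour, which you can then set against your business priorities.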

There are plenty of ways to visualise such data, marry it up with the other core metrics you’re tracking, and present it back to your team.

What tools can help me with log file analysis?

While a skilled user of Excel will be able to export and format log file data from its raw source and quickly visualise it into insightful analysis, there are plenty of tools available to help you speed this up. A key thing to remember is that log file analysis isn’t a one-off exercise; ideally you’ll want to feed in log file data and analyse it on an ongoing basis.
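Feeding logs in on an ongoing basis usually means reading rotated files, some of them compressed. A minimal sketch, assuming rotated logs sit in one directory and follow the common `access.log`, `access.log.1`, `access.log.2.gz` naming (your server or CDN export may differ); `iter_log_lines` is a hypothetical helper.

```python
import gzip
from pathlib import Path


def iter_log_lines(log_dir, pattern="access.log*"):
    """Yield lines from every matching log file, opening .gz archives transparently.

    Assumption: rotated files share the 'access.log' prefix; adjust `pattern`
    to match your own naming.
    """
    for path in sorted(Path(log_dir).glob(pattern)):
        opener = gzip.open if path.suffix == ".gz" else open
        with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
            for line in handle:
                yield line.rstrip("\n")
```

Because it yields one line at a time, it can stream months of logs without loading them all into memory.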

Popular SEO tool suites such as Ahrefs and Semrush have their own built-in log file analyser tools. You can simply upload your log files, and these tools will generate reports and dashboards highlighting key findings. This can be very useful for getting quick visualisations of the status code types being crawled, crawl frequency across URLs, resources and directories, orphan URLs, and more. Screaming Frog also offers a standalone Log File Analyser with a layout similar to its familiar crawling software.

For enterprise sites, larger SEO suites such as Botify and seoClarity also provide in-depth log file analysis features, allowing you to quickly visualise your log file data alongside the usual technical SEO analysis on an ongoing basis.

What should I do with the data from log files?

Let’s look again at some of the discoveries you might make during the log file analysis process, plus some recommended next steps.

URLs and website directories that are being prioritised over others, and how this aligns with your business goals

This can often be a quick verification exercise: simply marry up data such as organic visits and conversions with the pages or directories receiving the most requests from search crawlers. Any theories about faltering organic performance on crucial money pages can also be supported if those pages aren’t being crawled regularly.
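One simple way to marry up the two datasets is to compare each page's share of bot requests with its share of organic conversions. This is an illustrative heuristic rather than a standard metric; the two dictionaries are assumed to come from your logs and your analytics platform for the same period, and `crawl_vs_value` is a hypothetical name.

```python
def crawl_vs_value(crawl_counts, conversions):
    """Find pages whose share of conversions exceeds their share of bot requests.

    crawl_counts: {url: bot requests in the period}, from your logs
    conversions: {url: organic conversions in the same period}, from analytics
    Returns (url, crawl_share, conversion_share) rows, biggest gap first.
    """
    total_crawls = sum(crawl_counts.values()) or 1
    total_conversions = sum(conversions.values()) or 1
    rows = []
    for url in set(crawl_counts) | set(conversions):
        crawl_share = crawl_counts.get(url, 0) / total_crawls
        conversion_share = conversions.get(url, 0) / total_conversions
        if conversion_share > crawl_share:
            rows.append((url, crawl_share, conversion_share))
    # Pages that earn the most relative to the crawl attention they get come first
    rows.sort(key=lambda row: row[2] - row[1], reverse=True)
    return rows
```

Pages near the top of the output are candidates for the internal linking improvements discussed next.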

If there are some clear anomalies, assess ways in which you can improve your content offering and, crucially, improve internal linking signals to these pages. Are they linked to in the nav, footer or secondary nav? Is there a breadcrumb structure in place? What about internal links to these pages from other key pages across your site? All of these can strengthen the signals that prompt Googlebot and other search crawlers to crawl them more regularly.

Wasted crawl budget on low-value URLs and resources

Marrying up your data again, compare how often search engine crawlers request these low-value pages with how often they request your more crucial ones. Are there any pages here that shouldn’t be in the index? If so, take steps to redirect them, remove them or noindex them. You can also discourage Googlebot from crawling such URLs by adding the nofollow attribute to internal links pointing to them, though Google treats nofollow as a hint rather than a directive; a robots.txt Disallow rule is the more direct way to stop compliant crawlers requesting a URL.
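To put a number on wasted crawl activity, you can measure what fraction of bot requests hit URL patterns you consider low value. The patterns below (sort and filter parameters, session IDs, internal search) are hypothetical examples; swap in whatever generates low-value URLs on your site.

```python
import re

# Hypothetical low-value URL patterns; replace with your own.
LOW_VALUE_PATTERNS = [
    re.compile(r"[?&](sort|filter|sessionid)="),  # faceted or session parameters
    re.compile(r"^/search"),                      # internal site search results
]


def low_value_share(paths):
    """Return the fraction of bot-requested paths that match a low-value pattern."""
    paths = list(paths)
    if not paths:
        return 0.0
    wasted = sum(1 for p in paths if any(pat.search(p) for pat in LOW_VALUE_PATTERNS))
    return wasted / len(paths)
```

Tracking this share over time shows whether fixes such as redirects, noindex or robots.txt rules are shifting crawler attention towards your priority pages.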

Again, if you’re seeing instances of priority content being ignored in favour of pages of lower stature, then assess ways in which you can enhance their prominence on your site via structural and internal linking enhancements.

Orphan pages, redirects and 404s that are being repeatedly crawled in comparison to pages with a 200 status code

We’ll focus on orphan pages here, as 301 redirects and 404 pages can largely be managed with the indexation management and internal linking methods detailed earlier. Orphan URLs are pages that have no internal links pointing to them and sit “orphaned” outside your main site structure or XML sitemap. These can often be quick-win opportunities, particularly if they’re pages of high commercial value or useful content. If they’re getting organic traffic or have existing keyword rankings and you decide to keep them, bringing them into your site structure through internal linking is a solid move. If search crawlers are repeatedly requesting orphan URLs (or indeed 301 redirects) that serve no real purpose, consider removing them.
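Finding orphan candidates is essentially a set difference: URLs that bots request, minus URLs your own crawler reached by following internal links (for example, an export from a site crawl). A minimal sketch; `normalise` and `find_orphan_candidates` are hypothetical, and stripping query strings and trailing slashes is a simplifying assumption that will merge parameterised variants of a page.

```python
from urllib.parse import urlsplit


def normalise(url):
    """Reduce a full URL or path to a comparable path (no query, no trailing slash)."""
    path = urlsplit(url).path or "/"
    return path if path == "/" else path.rstrip("/")


def find_orphan_candidates(bot_requested, internally_linked):
    """Paths that bots request but a crawl of your internal links never reached."""
    requested = {normalise(u) for u in bot_requested}
    linked = {normalise(u) for u in internally_linked}
    return sorted(requested - linked)
```

Each candidate still needs a manual check against your organic traffic and rankings before you decide whether to link it in or remove it.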

Whether or not any noindex or canonical URL implementations are being respected accordingly

Ideally, if you’re already mindful of indexation management and of managing crawl budget through noindex tags or other means, this shouldn’t be an issue. However, search engines may not always handle these signals the way you expect. This is where log file analysis can really help in understanding whether noindex and nofollow directives on content you don’t want appearing in search have been implemented properly and are being respected. If they’re not, reassess your implementation or take stronger steps, such as blocking certain pages in your robots.txt file or submitting removal requests in Google Search Console. Bear in mind that a robots.txt block stops crawlers from fetching a page, so they won’t see any noindex tag on it.
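Logs can also show whether robots.txt rules are being respected. This sketch uses Python's standard-library `urllib.robotparser` to list paths that robots.txt disallows for a given user agent but that still appear in that agent's requests; `blocked_but_crawled` is a hypothetical name. Note that this parser applies the original robots.txt rules (prefix matching, first matching rule wins) and doesn't support Google's `*` and `$` wildcards or longest-match precedence, so treat its output as a first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_but_crawled(robots_txt, bot_paths, user_agent="Googlebot"):
    """Return paths disallowed for `user_agent` that still appear in its requests.

    robots_txt: the text of your robots.txt file
    bot_paths: paths requested by (ideally verified) requests from that bot
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return sorted({p for p in bot_paths if not parser.can_fetch(user_agent, p)})
```

A hit here is worth checking against when the rule was last changed, since crawlers may work from a cached copy of robots.txt, and against whether the requester really is the bot it claims to be.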

Understanding the correlation between crawl behaviour and how long it takes to deliver organic traffic and results

Aside from technical troubleshooting and opportunity analysis, an under-utilised use of log file analysis is performance forecasting. For example, you can follow a paper trail from when a URL was first published to when it was first crawled according to your log file data, giving you an idea of how long it takes Google to discover and crawl new content on your site. Moving further down the funnel, you can then layer on metrics such as search impressions and clicks, and note the dates when the new page started receiving significant organic traffic. This can be very useful for identifying trends and forecasting performance for certain page types.

For example, if you publish a well-optimised product page that is first crawled, then starts seeing upticks in impressions and organic traffic after a certain period, that timeline can be a great model for forecasting performance and getting buy-in for future content ventures.
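Once you have the milestones for each URL, the paper trail reduces to date arithmetic. A sketch, assuming publication dates come from your CMS, first Googlebot requests from your logs and first clicks from a Search Console export; `days_to_milestones` and `median_days_to_crawl` are hypothetical names.

```python
from datetime import date
from statistics import median


def days_to_milestones(published, first_crawled, first_click=None):
    """Days from publication to first bot request and, optionally, first organic click."""
    to_crawl = (first_crawled - published).days
    to_click = (first_click - published).days if first_click is not None else None
    return to_crawl, to_click


def median_days_to_crawl(pages):
    """pages: iterable of (published, first_crawled) date pairs for one page type."""
    return median((crawled - published).days for published, crawled in pages)


# Usage: days_to_milestones(date(2023, 5, 1), date(2023, 5, 3), date(2023, 5, 20))
```

Taking the median across many pages of the same type gives a steadier benchmark than any single page.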

Unlocking growth with log file analysis

While assessing log files in detail across thousands of URLs may seem a daunting venture from a technical analysis point of view, the findings and subsequent strategy learnings can be huge for SEO. Log file analysis gives you a direct view of how Googlebot (and other crawlers) behave on your site, and that understanding can be hugely valuable in making decisions that drive sustainable organic growth.

About the author

Michael Carden-Edwards is SEO Strategy Lead at Reddico. A seasoned SEO and digital marketing expert with 13+ years of experience, Michael has directed SEO strategies for major brands like British Airways and O2 as well as conducting countless public and internal SEO training sessions. Based in Sevilla, he joined Reddico in 2021, enjoying the flexible working and unique culture from a sunnier climate.
