Ecommerce Core Web Vitals Study

Google kicked every ecommerce team into gear last year by announcing that Core Web Vitals will become a ranking signal for its search results. The rollout has been delayed until May 2021 whilst the world concentrates on beating COVID-19, but the threat to many ecommerce websites is very real.

Ever since the days of Boo.com, online retailers have focussed more on the “buying experience” than the web experience.

But what are the “Core Web Vitals” and how will they affect the SEO traffic (and ultimately the revenue) of online retailers this year?

What are Core Web Vitals?

In simple terms, they are three metrics that gauge the performance and usability of an individual web page. Every page on your site can have different scores, unless it’s a carbon copy of another page. The three metrics used by Google are:

Largest Contentful Paint (LCP) - The time it takes for the largest piece of content on a page to become visible. If you’re Wikipedia, this would be the text content on a page. For an ecommerce site though, this could be a large banner/hero image, product image or even a section of customer reviews that are loaded using JavaScript.

First Input Delay (FID) - The delay between a visitor’s first interaction with a page (a click, tap or key press) and the browser being able to respond to it. Using Wikipedia as an example again, this will be very fast, as the pages are simple and use very little JavaScript. On an ecommerce page though, JavaScript may need to load before a link can be clicked, a form filled, an item added to the cart, a navigation dropdown opened or an image gallery “Next” button used.

Cumulative Layout Shift (CLS) - The amount that elements move unexpectedly on a page. This factor is about usability rather than speed. An ecommerce site could have a floating banner bar at the top of a page, with a discount code or offer. If that bar appears after the page first starts to load (i.e. using JavaScript), it could push down the rest of the content and cause frustration to a user who may be trying to read the page or click on a link.

Why do they exist?

It all started with a “Page Speed” browser extension, built by the engineering team at Google in 2009. It allowed developers to find issues that were slowing their web pages down. This evolved into a module for web servers that automatically optimised HTML pages before they were loaded. Despite Google seeing a 25%-60% increase in performance on sites that followed its advice, only a small fraction of sites did so. Google tested the laziness of website builders even further by offering a “Page Speed Service”, where you simply pointed your domain name at Google and it did all of the hard optimisation work for you.

The problems were twofold in online retail. In-house ecommerce managers were being pushed to innovate - to give a reason for people to shop with them rather than their high street rivals and new online competitors such as Amazon, ASOS and AO. Their digital agencies were also under pressure to innovate, evolve and use the latest technology to achieve this.

Google was partly to blame for this uneasy website evolution. YouTube’s endless free content paved the way for video online, so users began to expect video content and ecommerce stores felt that they must oblige.

Google also developed the AngularJS framework in 2010 (later inspiring React and Vue), which led the way for JavaScript-powered “Single Page App” websites. The technology was aimed at web applications and dashboards hidden behind a login screen, to give them a mobile app experience. But developers embraced the new tech and built ecommerce websites using it, to impress their clients and justify yet another website rebuild. The problem was that no search engines, including Google, were able to read the fancy new app-like websites. At that point, JavaScript was only examined to discover new links and blackhat cloaking - executing the code to render a page was experimental at best and rarely used. The big three search engines all now crawl web pages using a web browser, to support JavaScript-driven websites and understand what users see, at a significant added cost to them and the environment.

The same year that AngularJS launched, Google announced that the speed of a page would become a ranking signal (but only affecting ~1% of websites). Attempts to get web developers to build smaller and faster web pages were having the opposite effect, so Google made these damning reports more visible to non-techies.

The renamed “PageSpeed” (no space) metrics became more accessible to everyone via the PageSpeed Insights tool. Google’s Webmaster team started pushing PageSpeed as an important metric to SEOs, who were already well-equipped and experienced with battling against developers and poor website builds. In 2019, “Speed” reports were introduced to Google Search Console, putting worrying “POOR” charts of red in front of SEOs and in-house digital marketers.

PageSpeed’s Good / Needs Improvement / Poor labels were an oversimplification, however, and the underlying data was too baffling for most to understand. So in 2020, Google announced “Core Web Vitals” - three core metrics that websites will be judged on and website builders can work to improve.

Core Web Vitals for the top 500 retail brands

To get a good picture of which brands will be affected by the Core Web Vitals ranking signal update in May, we audited the homepage of every ecommerce website in RetailX’s 2021 Top500 Retail Brands league table. By using this list of top online retail brands, we get a more varied and typical view of the UK's ecommerce landscape, unlike sources such as Alexa, SimilarWeb and SEMRush, which would show bias towards brands already performing well in organic search.

Method

RetailX’s league table didn’t include website addresses, so we manually Googled and collected the homepage URL of every Top500 brand mentioned. We then used our in-house web crawler to crawl our list of 500 homepages and generate a PageSpeed report for each one using Google’s API. This report included lots of useful information, including the Core Web Vitals metrics. Both the web page crawling and the PageSpeed report were conducted as a mobile user, as the vast majority of the UK’s websites are crawled by Google’s mobile/smartphone bot.
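As a rough illustration of the method above, the sketch below requests a mobile report from the public PageSpeed Insights v5 endpoint and pulls out the score and the main metrics. It is not the in-house crawler used for this study. The function names are hypothetical, and the response field names reflect our understanding of the v5 response format, so check them against Google's API reference before relying on them.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, api_key=""):
    """Build a PageSpeed Insights v5 request URL for a mobile audit."""
    params = {"url": page_url, "strategy": "mobile", "category": "performance"}
    if api_key:  # a key is optional for occasional, low-volume requests
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


def extract_vitals(report):
    """Pull the score, lab metrics and field metrics out of a parsed report."""
    lighthouse = report.get("lighthouseResult", {})
    audits = lighthouse.get("audits", {})
    field = report.get("loadingExperience", {}).get("metrics", {})

    def lab(audit_id):
        return audits.get(audit_id, {}).get("numericValue")

    def crux(metric):
        return field.get(metric, {}).get("percentile")

    score = lighthouse.get("categories", {}).get("performance", {}).get("score")
    field_cls = crux("CUMULATIVE_LAYOUT_SHIFT_SCORE")
    return {
        # The API reports the score as 0..1; the familiar figure is out of 100.
        "pagespeed_score": None if score is None else round(score * 100),
        "lab_lcp_ms": lab("largest-contentful-paint"),
        "lab_tbt_ms": lab("total-blocking-time"),
        "lab_cls": lab("cumulative-layout-shift"),
        "field_fid_ms": crux("FIRST_INPUT_DELAY_MS"),
        # Assumption: the field CLS percentile is reported multiplied by 100.
        "field_cls": None if field_cls is None else field_cls / 100,
    }


def audit_homepage(page_url, api_key=""):
    """Fetch and summarise one homepage (performs a network request)."""
    with urlopen(build_psi_url(page_url, api_key), timeout=120) as response:
        return extract_vitals(json.load(response))
```

Calling `audit_homepage("https://example.com/")` for each URL in a list would give one row per brand. Pages with too little Chrome user data return no field metrics, which is why every value can be `None`.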

There were 19 holes in our crawl of the Top500 retail brands. These were either grocery websites (Ocado, Morrisons et al) that put Googlebot into an online queue, or brands that 302 redirect Googlebot elsewhere because of its US IP address. This is what Googlebot really sees when indexing these brand websites though (the PageSpeed API uses a Google IP address and user agent), so those brands are likely to be suffering from SEO issues because of it.

PageSpeed Scores

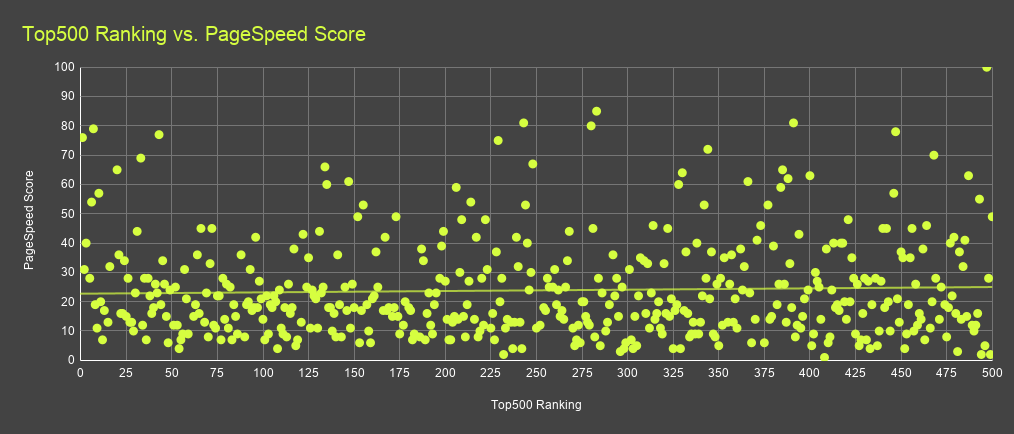

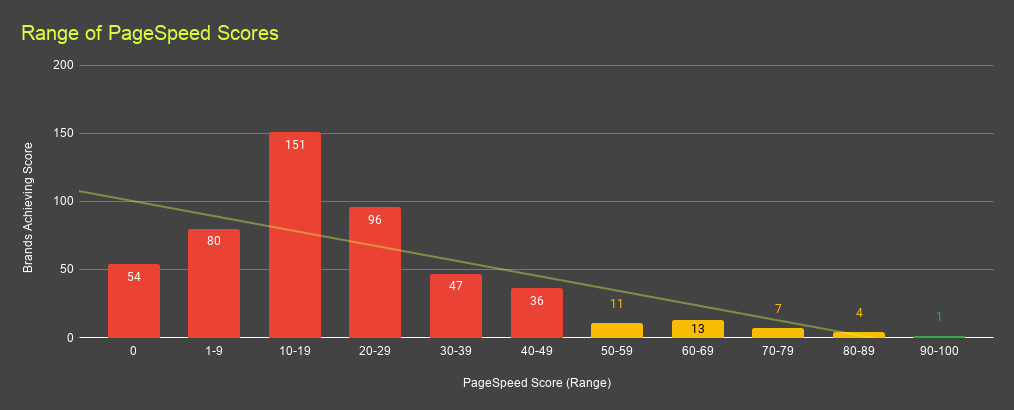

We first analysed the PageSpeed scores of our Top500 brands, which includes Core Web Vitals and other metrics. It’s the easiest metric to compare, as it gives each page a score out of 100, based on how well or badly a page performed for each underlying metric.

As you can see in the chart above, there is no correlation between a brand’s position in the Top500 list and its website performance. If anything, it shows how poorly so many ecommerce websites are still performing, with the average PageSpeed score sitting at 24/100. The top-scoring homepage (with a perfect score of 100) was Wish.com, which comes in at 497 in the Top500 list. This perfect score is quite easy for their homepage to achieve though, as it simply consists of a login screen.

What’s more worrying is that Wish.com was the only website out of 500 to achieve a “Good” score. The majority of homepages were scored as “Poor” (red in the chart above) and only 35 achieved a “Needs Improvement” rating (yellow).

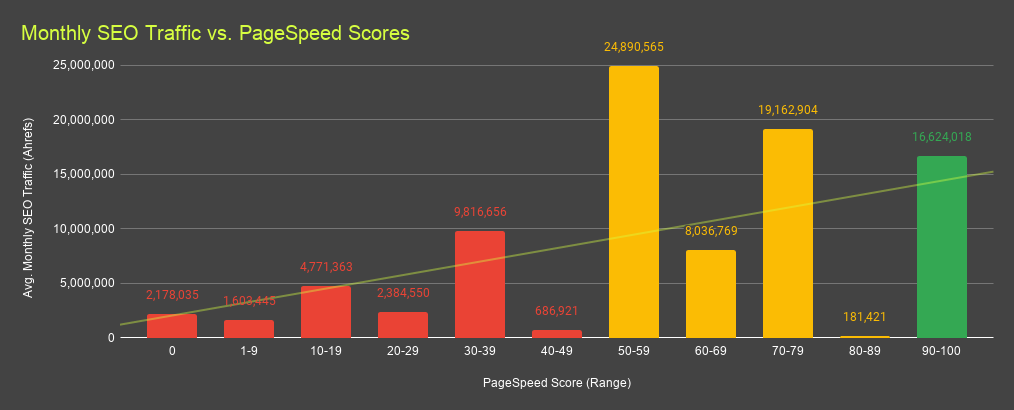

There’s a shimmering light at the end of the tunnel for ecommerce teams planning to improve their PageSpeed and Core Web Vitals: a slight correlation between a higher PageSpeed score and higher monthly organic/SEO traffic (traffic data from Ahrefs). This is likely because websites with higher organic search traffic are more likely to have an SEO agency or in-house team, who would have been championing PageSpeed improvements already.

| RXUK Top500 Rank | Brand | PageSpeed Score |
| --- | --- | --- |
| 497 | Wish.com | 100 |
| 283 | Guitarguitar | 85 |
| 243 | | 81 |
| 391 | | 81 |
| 280 | | 80 |
| 7 | | 79 |
| 447 | | 78 |
| 43 | | 77 |
| 1 | | 76 |
| 229 | | 75 |

Looking at the 10 best-performing homepages, the presence of Amazon probably isn’t any surprise to most. Perhaps more surprising are the smaller brands outperforming many ecommerce giants (in PageSpeed terms).
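To show how the Good / Needs Improvement / Poor ratings relate to the score out of 100, here is a minimal sketch that buckets the ten scores from the table above. The 90 and 50 cut-offs are the bands published for Lighthouse performance scores rather than figures stated in this study, and the function name is our own.

```python
from collections import Counter


def rate_pagespeed_score(score):
    """Map a 0-100 PageSpeed score onto Google's three colour bands."""
    if score >= 90:
        return "Good"               # green
    if score >= 50:
        return "Needs Improvement"  # yellow/orange
    return "Poor"                   # red


# PageSpeed scores of the ten best-performing homepages in the table above.
top_ten_scores = [100, 85, 81, 81, 80, 79, 78, 77, 76, 75]
summary = Counter(rate_pagespeed_score(score) for score in top_ten_scores)
```

Only the score of 100 lands in the “Good” band, which matches the finding that a single homepage achieved that rating. The study’s average score of 24 falls well inside “Poor”.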

Digging deeper into Guitarguitar

Ranked 283rd in the Top500 list, Scottish-born guitar shop Guitarguitar has shrunk from 99 employees in 2016 to 55 employees in 2021 (according to Craft and LinkedIn). But their revenue has increased year-on-year, most likely from ecommerce sales.

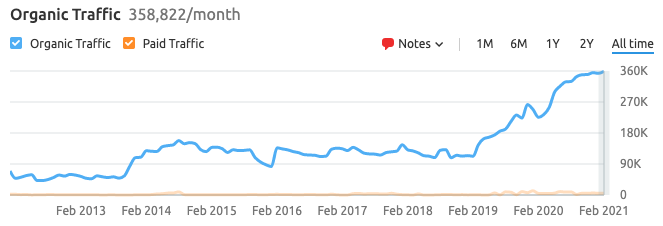

Source: SEMRush

This growth can be seen in SEMRush, which shows very little PPC spend, but a huge growth in SEO rankings and traffic from 2019.

Source: Ahrefs

The website also appears to have carried out a big link clean-up in 2018, followed by an active link building campaign in 2019.

But most impressive is the website itself, which appears to have been custom built in-house using ASP.NET, led by a former Citibank engineer with extensive ecommerce experience. By custom building the site, they have avoided the technical debt that comes with hosted and generic ecommerce platforms such as Magento. When software is built for everybody and with every feature, the features that one site doesn’t use or need can just slow it down. They have ignored the latest technology trends and frameworks, using the bare minimum of JavaScript required to make the site work as it should. A lack of code waste results in a fast and stable page loading experience.

This seemingly “Mom and Pop” shop has made all the right moves and is going toe-to-toe with Amazon and other retail giants. Guitarguitar.co.uk ranks #3 for [guitar] in the UK, only outranked by Wikipedia and fellow niche music shop, Gear4Music.com.

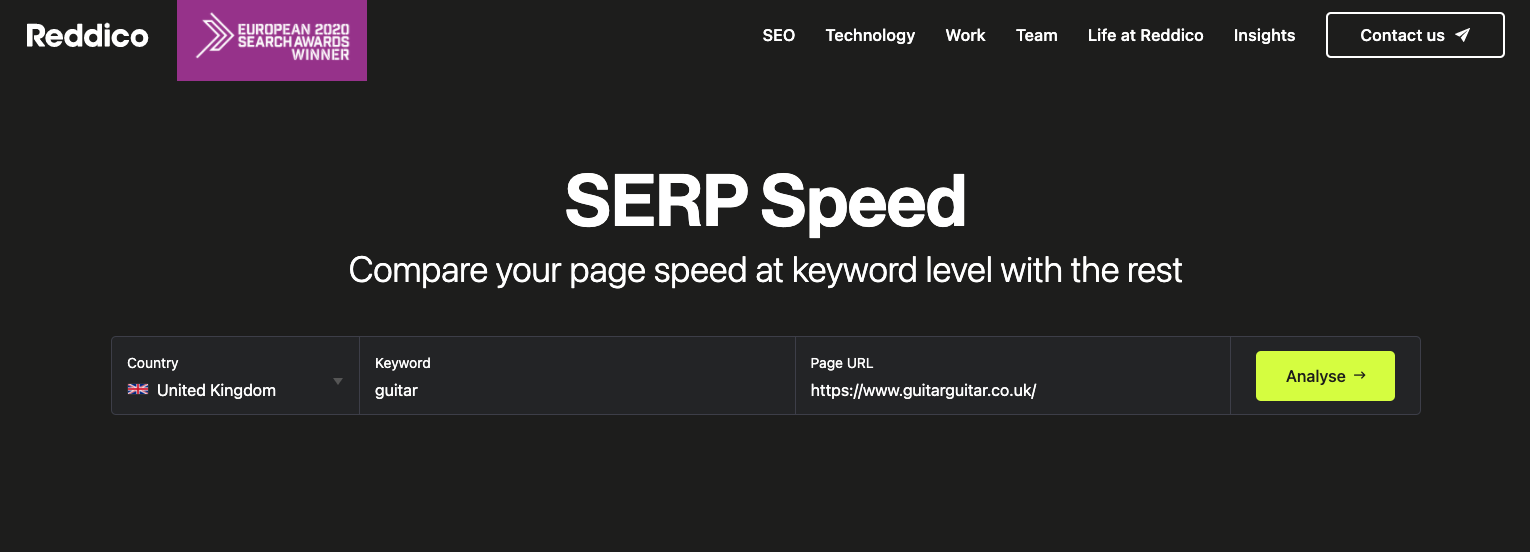

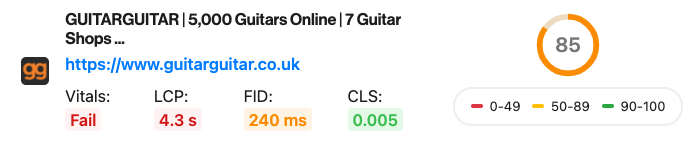

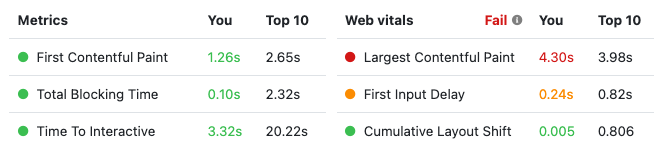

We then used our SERP Speed tool to see how the Core Web Vitals ranking update will affect Guitarguitar.co.uk. Despite achieving the second-best PageSpeed score amongst the Top500, they’re not out of the woods and are not even classified as “Good” by Google.

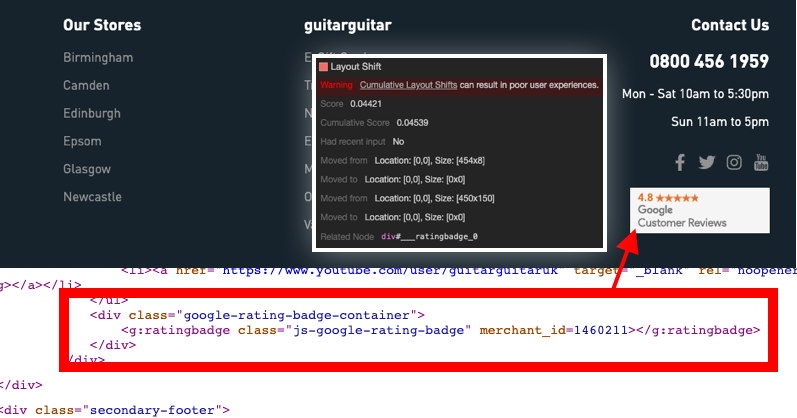

Because of their minimalist approach to JavaScript functionality, the Cumulative Layout Shift score is good at 0.005, but Gear4Music’s is better (perfect) at 0.00. Ironically, Google itself is the biggest disrupter to Guitarguitar’s perfect CLS score.

Their “Google Customer Rating” badge is loaded via JavaScript without any fixed dimensions at first, causing the page’s footer to move down once the badge is loaded. This problem will affect every business displaying Google’s rating badge, so it should be addressed by the tech giant. In the meantime, Guitarguitar can fix it by setting a fixed width and height in CSS (or at least a “min-height”) on the parent <div> tag around the badge. This will reserve the space for the badge whilst waiting for Google’s badge code to load, preventing any layout shift.
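To see why a late-loading badge registers as a layout shift, it helps to know how a single shift is scored: the impact fraction (how much of the viewport the moved content touches before and after) multiplied by the distance fraction (how far it moved, relative to the viewport's largest dimension). The sketch below applies that formula to one full-width element pushed straight down. The viewport, footer and badge sizes are made-up illustrative numbers, not measurements from Guitarguitar's page.

```python
def layout_shift_score(viewport_w, viewport_h, top, height, shift):
    """Score one full-width element that moves vertically by `shift` pixels.

    score = impact fraction * distance fraction
    """
    if shift == 0:
        return 0.0  # space was reserved, so nothing moved

    def visible_span(start, end):
        """Clip a vertical span to the viewport; return (start, end, length)."""
        start, end = max(start, 0), min(end, viewport_h)
        return start, end, max(0, end - start)

    b_start, b_end, b_len = visible_span(top, top + height)
    a_start, a_end, a_len = visible_span(top + shift, top + height + shift)
    overlap = max(0, min(b_end, a_end) - max(b_start, a_start))

    # Union of the visible area before and after, as a share of the viewport.
    impact_fraction = (b_len + a_len - overlap) / viewport_h
    # Distance moved, relative to the viewport's largest dimension.
    distance_fraction = abs(shift) / max(viewport_w, viewport_h)
    return impact_fraction * distance_fraction


# Hypothetical mobile viewport: a footer starting 600px down a 360x640 screen
# is pushed down by a 60px badge that loads late.
unreserved = layout_shift_score(360, 640, top=600, height=200, shift=60)
# With 60px reserved for the badge up front, the footer never moves.
reserved = layout_shift_score(360, 640, top=600, height=200, shift=0)
```

With these invented numbers the unreserved case scores roughly 0.006, while reserving the space brings the shift to zero. Content near the bottom of the screen only contributes a small score, but the same badge loading near the top of the page would shift far more of the viewport.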

The FID (First Input Delay) score is worse at 240ms (under 100ms is a pass and over 300ms is a fail). This is a hard one to measure, as it’s purely based on Field Data - real-world user data from Google Chrome browsers (CrUX). The browser reports on how quickly someone is able to click, type or interact with a page. Only Google has access to the full data, so the nearest metric that we can measure is TBT (Total Blocking Time). We can see the TBT on our SERP Speed report, and it’s in the green (Good), at 0.10s:

Source: Reddico SERP Speed tool report for Guitarguitar.co.uk

As TBT is Lab Data, measured when Google’s servers load the page, and FID comes from Field Data (people), it’s possible that some real users struggle with the page more than Google’s test does. This can sometimes be caused by JavaScript code that only loads for specific users, such as Country Detection, A/B Testing and UX/RUM systems. There’s a reference to HotJar in their source code and also a Live Chat app, so these would be the first things to look at.
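For context on why TBT is used as a stand-in for FID: Total Blocking Time adds up the portion of every main-thread task that runs beyond 50ms, between First Contentful Paint and Time to Interactive. The sketch below applies that definition to a list of task durations. The durations are invented for illustration and are not taken from any real page trace.

```python
LONG_TASK_BUDGET_MS = 50  # anything beyond this counts as "blocking" time


def total_blocking_time(task_durations_ms):
    """Sum the part of each main-thread task that exceeds the 50ms budget."""
    return sum(
        max(0, duration - LONG_TASK_BUDGET_MS) for duration in task_durations_ms
    )


# Hypothetical main-thread tasks during page load, in milliseconds.
tasks = [30, 120, 80, 50]
tbt_ms = total_blocking_time(tasks)  # 70ms from the 120ms task + 30ms from the 80ms task
```

A visitor who taps during one of those long tasks has to wait for it to finish, which is what FID records. Extra scripts that only run for some real users add long tasks that a lab test never sees.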

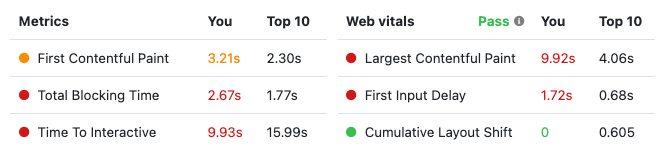

All scores are relative though, particularly as a ranking factor. Running a SERP Speed report against Gear4Music on the same [guitar] keyword, we can see that they have an awful lot more to worry about, with FID (First Input Delay) and TBT (Total Blocking Time) both in the red (Poor):

Source: Reddico SERP Speed tool report for Gear4Music.com

With a similar CLS score to Guitarguitar, a FID score that’s seven times worse than Guitarguitar’s and an LCP that’s more than double, Gear4Music must be hoping that the Core Web Vitals ranking update is a small one. Otherwise, they may well lose their #2 ranking to Guitarguitar.

Guitarguitar was given a “Fail” for LCP (Largest Contentful Paint) as well though, recorded at 4.30s, with a pass mark of 2.50s. This is caused by the large images in the homepage’s rotating carousel at the top of the page. Even though the site uses “lazy loading” on hidden images, the page’s large initial hero image takes time to download on slower connections and can make the overall page feel slow, because it takes up so much of the visible screen.

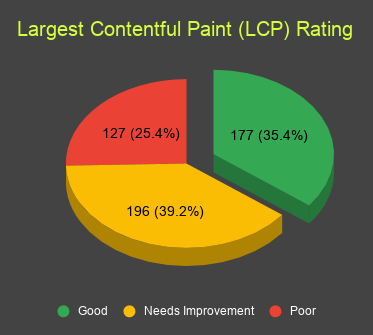

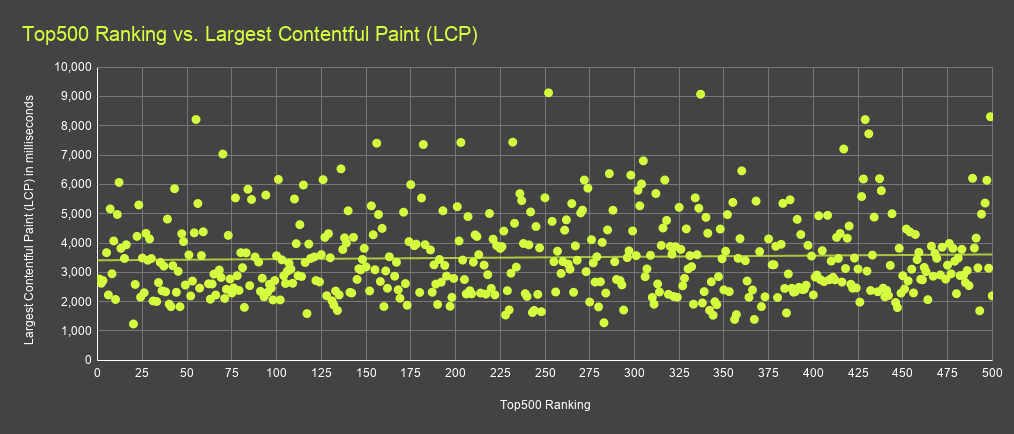

Largest Contentful Paint

LCP scores amongst our Top500 retail brands ranged from 1.2 seconds up to 9.1 seconds, averaging out at 3.5 seconds. With 2.5 seconds or less needed to get a “Pass”, most of our sample failed or needed improvement. The biggest causes of poor LCP scores on ecommerce websites are large images and JavaScript-embedded content (such as reviews from a third-party platform). Ecommerce websites shouldn’t look like Wikipedia, so they struggle to balance the visual appeal needed to attract sales with fast loading times.

Snow+Rock performed well for LCP, as they used “Lazy Loading” on offscreen (not visible) images. They also implemented the “srcset” attribute on larger images, giving mobile and tablet devices the option of loading smaller versions of the images. Outside of the main “hero” image, they also opted for lots of small, well-optimised images rather than larger media formats that can make the page feel slower.
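The two techniques mentioned above are both plain HTML attributes on the image tag: “srcset” lists alternative sizes for the browser to choose from, and loading="lazy" defers offscreen images. As a minimal sketch, the helper below generates such a tag on the server side. The `?w=` resizing parameter and the image paths are hypothetical and will differ on your own platform or image CDN.

```python
from html import escape


def responsive_img(base_url, widths, alt, lazy=True):
    """Render an <img> tag with a srcset, optionally lazy-loaded.

    Assumes (hypothetically) that the image server resizes via a ?w= parameter.
    """
    ordered = sorted(widths)
    srcset = ", ".join(f"{base_url}?w={width} {width}w" for width in ordered)
    attrs = {
        "src": f"{base_url}?w={ordered[0]}",  # smallest size as the fallback
        "srcset": srcset,
        "sizes": "100vw",  # image spans the full viewport width
        "alt": alt,
    }
    if lazy:
        # Only for offscreen images: lazy-loading the hero image would delay LCP.
        attrs["loading"] = "lazy"
    rendered = " ".join(f'{name}="{escape(value)}"' for name, value in attrs.items())
    return f"<img {rendered}>"


hero = responsive_img("/img/hero.jpg", [960, 480], "Winter sale", lazy=False)
thumb = responsive_img("/img/boots.jpg", [240, 480], "Walking boots")
```

The hero image is deliberately not lazy-loaded, because it is usually the Largest Contentful Paint element and should start downloading straight away. Adding explicit width and height attributes to the same tag would also reserve space and help with Cumulative Layout Shift.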

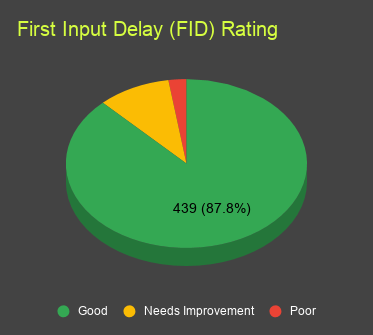

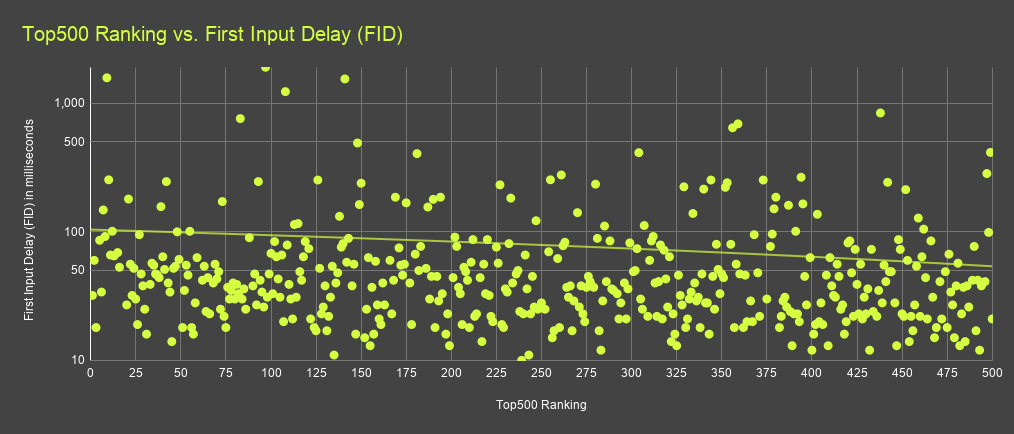

First Input Delay

First Input Delay (FID) varied dramatically between websites - so much so that we had to use a logarithmic scale for our chart below.

TheWorks.co.uk broke our chart, with an abysmal FID score of 1,909ms, followed by DIY.com (B&Q) at 1,579ms and Sally Beauty at 1,548ms. With the pass mark at 100ms, TheWorks.co.uk is almost twenty times slower than the level deemed acceptable by Google. Even B&Q and Sally Beauty are five times slower than the 300ms mark at which Google rates a page as “Poor”.

TheWorks appears to have been designed by someone who has only designed paper brochures before and has a very fast internet connection. Its homepage loads over 280 media assets, mostly imagery. There is little textual content on the page, as it’s all in the images, so it’s hard to start using the website until everything has loaded (most of the links are images too). If they implemented Lazy Loading and “srcset” on their pages (as recommended above for LCP), it would at least allow mobile users to load their page and start interacting (plus fix our chart).

For most sites (such as B&Q), poor FID scores are caused by using too much JavaScript, which must finish executing before the page can be interacted with. The more fancy interactivity that’s added, the worse the FID scores become.

The better-performing sites such as Majestic Wines (13ms) and Overclockers (11ms) often use bespoke or niche ecommerce platforms, where most of the code and features loaded are necessary (less redundant/bloated code). Whilst they all use JavaScript to varying degrees, they don’t rely on it or hold up the page to load it. But the good news is that a majority (88%) of our Top500 websites passed, with FID scores lower than 100ms. 10% needed improvement and only 2% failed.

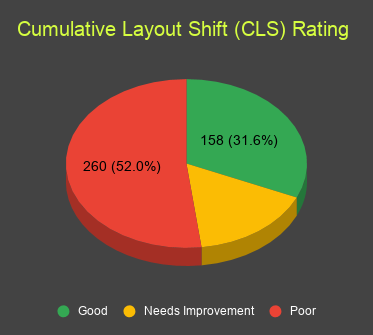

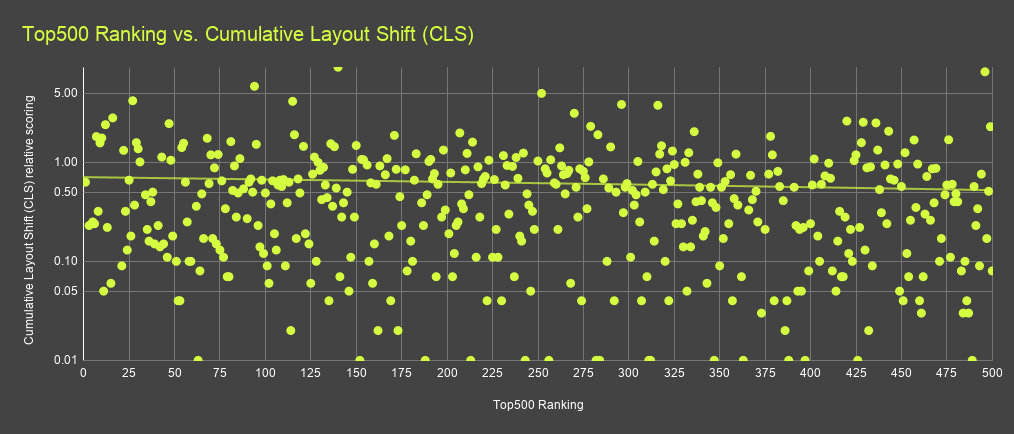

Cumulative Layout Shift

If you’ve loaded a recipe for pancakes or looked up the half-time scores on your phone before, only to accidentally click on the wrong thing when an advert pushes the content down, you’ve been a victim of Layout Shift. Google considers it a bad enough problem that it makes up one of the three Core Web Vitals.

Ecommerce websites in Europe tend to suffer a lot more from CLS, as they have the mandatory “We use cookies” GDPR notices popping up, alongside all of the other on-page factors such as images not having fixed dimensions and AJAX content not having any set height or width. On the ASOS mobile homepage, for example, someone wanting to click “Shop Men” could easily click “Shop Women” by mistake, as the cookie disclaimer pushes the whole page content down after loading.

This is why ASOS fails the CLS test, with a score of 0.3 (below 0.1 is good and above 0.25 is poor). If they loaded the cookie disclaimer over the top of the page (fixed or absolute positioning) rather than pushing the content down, ASOS would likely pass with a “Good” CLS score.
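The pass and fail marks quoted throughout this study can be collected into one small lookup. The sketch below rates a measured value against them. The FID and CLS limits and the LCP pass mark are the ones given in this article, while the 4.0-second “Poor” boundary for LCP comes from Google's published thresholds rather than from our data. The dictionary keys and function name are our own.

```python
# (good_limit, poor_limit): at or below the first is "Good",
# above the second is "Poor", anything in between "Needs Improvement".
THRESHOLDS = {
    "lcp_s": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "fid_ms": (100, 300),  # First Input Delay, milliseconds
    "cls": (0.1, 0.25),    # Cumulative Layout Shift, unitless
}


def rate_metric(metric, value):
    """Return Google's rating band for one Core Web Vitals measurement."""
    good_limit, poor_limit = THRESHOLDS[metric]
    if value <= good_limit:
        return "Good"
    if value <= poor_limit:
        return "Needs Improvement"
    return "Poor"


# Figures reported in this study.
asos_cls = rate_metric("cls", 0.3)
guitarguitar = {
    "cls": rate_metric("cls", 0.005),
    "fid_ms": rate_metric("fid_ms", 240),
    "lcp_s": rate_metric("lcp_s", 4.3),
}
```

Run against the numbers in this article, ASOS's CLS of 0.3 rates as “Poor”, while Guitarguitar comes out as “Good” for CLS, “Needs Improvement” for FID and “Poor” for LCP.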

ASOS aren’t alone with this issue, as more than half (52%) of the Top500 scored “Poor” for Cumulative Layout Shift on mobile devices. This is largely due to ecommerce platforms and design agencies not adapting to this new metric yet. Cookie disclaimers don’t have to hurt CLS though, and nor do promo pop-ups or image sliders. They just have to be implemented in a way that stops them from interfering with the other content, links and imagery around them.

If you can, set fixed sizes (at least heights) for any content that’s loaded after the page first renders. That includes your TrustPilot badges, BazaarVoice review embeds, "Buy Now Pay Later" offers, and newsletter banner bars.

Nothing Lasts Forever

An important disclaimer on the Core Web Vitals page is rarely mentioned, perhaps because it makes fixing the issues harder to sell to brands and dev teams. Near the bottom of Google’s CWV page they state:

“Web Vitals and Core Web Vitals represent the best available signals developers have today to measure quality of experience across the web, but these signals are not perfect and future improvements or additions should be expected.”

Core Web Vitals are an evolving benchmark with which to grade website usability. Today’s “Poor” could be tomorrow’s “Good” and vice versa. Some of the metrics might be removed and others could be added. They will always reflect what Google considers important to the user, however. A slow initial page load will never come into fashion, and a hard-to-navigate page will not become the new “easy”.

CWVs might be the latest thing that SEOs are talking about right now, but PageSpeed has already been part of Google’s ranking signals for years. And any new metrics added to CWV scoring are likely to come from the existing set of metrics shown on a PageSpeed/Lighthouse report. So keep an eye on all of the PageSpeed metrics, not just LCP, FID and CLS.

We’ll find out in May 2021 just how important this ranking factor becomes. But one thing will be true for the foreseeable future - fast-loading, easy-to-use websites are better for everyone, including an ecommerce store’s revenue.

If you would like to test your Core Web Vitals at a keyword level, we have a free SERP Speed tool to help you with that.
