Our culture revolution. Part 10: The 360 Review tool starts to take shape

We’re just a normal agency. You may own one. You may work for one. We’re ticking along nicely, picking up new business and growing at a good pace. The team has grown from 1 to 20 people in five years, with plans of reaching 50 by 2021. Everyone seems happy. But we want more.

Day Zero was the launch of our manifesto. Its aim? To revolutionise our culture, attract amazing talent, and be recognised nationally as a great place to work.

Over the course of the next few months we’ll be taking you to the heart of Reddico, sharing our highs, our lows, and our eureka moments. We’ll be honest and open about everything. What works. What doesn’t. Whether you’re here for inspiration, to watch us fail, or out of sheer curiosity, welcome along.

No hours. No managers. Rules set by the team. Let’s see what happens next.

Last week we introduced the concept of 360 reviews, detailing why they’re a fantastic opportunity to get valuable feedback from everyone you work with – not just your line manager.

Whilst they can sound a little scary (perhaps you don’t want to know what the person next to you thinks), an open line of communication gives you the chance to understand where your strengths lie and where you could continue to improve.

They’re not for everyone, and they can have negative consequences if not handled carefully (take the Amazon example). But we believe they’re the only true way to get a completely rounded appraisal.

Building a fit-for-purpose 360 tool

We knew we wanted to introduce 360s across the business. We knew we wanted them to be open. And we knew we wanted an option to choose your reviewers AND have reviewers choose you.

Not much to ask, right?

When we researched the market, we found some great tools, but nothing really ticked all the boxes. Even our current HR system offers 360 feedback, but only as part of a larger performance appraisal.

We didn’t want our 360s to be that rigid. There had to be fluidity in there.

It left us with one option – to utilise the skills of the team and create our own piece of kit. We already had ideas of what we wanted to include in such a tool, but developing a full review system with questions and scoring would be tough going.

We held an all-team meeting to discuss 360s and find out what the team wanted from them, so they wouldn’t become a chore or take too long to complete. This sparked debate on whether it was best to choose your reviewers or have them choose you, with one of the main question marks being:

“Would people deliberately choose colleagues they know would give them a better score?”

However, we applied the same reasoning we’ve adopted throughout all the company changes this year:

“Trust in people to do the right thing and build your culture for the 98% who will do it right – not the 2% who won’t.”

That left us with a format of:

Quarterly 360 reviews

You choose three people to review you

One person can be chosen a maximum of five times

You can choose to review anyone (even if they don’t pick you)
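We haven’t gone into how the tool enforces these rules, so what follows is only a rough sketch, in Python, of how the selection limits for a quarterly round could be checked. The function name, the data shape (each person mapped to the three colleagues they picked) and the rule that you can’t pick yourself are our assumptions for illustration, not details of the real tool. Reviews people volunteer for (the last rule) aren’t modelled here.

```python
from __future__ import annotations

from collections import Counter

REVIEWERS_PER_PERSON = 3  # "You choose three people to review you"
MAX_TIMES_CHOSEN = 5      # "One person can be chosen a maximum of five times"


def validate_nominations(nominations: dict[str, list[str]]) -> list[str]:
    """Return rule violations for one quarter's nominations (empty list = valid).

    Hypothetical helper: `nominations` maps each team member to the
    colleagues they picked to review them.
    """
    problems = []
    for person, chosen in nominations.items():
        # Exactly three reviewers, with no duplicates
        if len(chosen) != REVIEWERS_PER_PERSON or len(set(chosen)) != len(chosen):
            problems.append(
                f"{person} must choose exactly {REVIEWERS_PER_PERSON} different reviewers"
            )
        # Assumption: the self-review is separate, so you can't nominate yourself
        if person in chosen:
            problems.append(f"{person} cannot choose themselves")

    # Count how often each colleague was picked (a duplicated name counts once)
    counts = Counter(r for chosen in nominations.values() for r in set(chosen))
    for reviewer, times in sorted(counts.items()):
        if times > MAX_TIMES_CHOSEN:
            problems.append(f"{reviewer} was chosen {times} times (max {MAX_TIMES_CHOSEN})")
    return problems
```

Counting each colleague once per nominator means a name entered twice by mistake doesn’t eat into that person’s limit of five; it gets flagged as a duplicate instead.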

With a tight timeframe to have a working v1 of the tool ready, the only guidelines for development were:

Self-review before choosing peer reviewees

Multiple questions to assess each core skill

Option to answer questions on a scale of 1-5

Optional open-ended questions to provide further feedback

Opportunity to choose who reviews you

Opportunity for you to choose who you review

Nice-to-have: Graph to compare personal and peer reviews

With that in the hands of development, we were left with the small matter of determining 360 questions to complete the tool.

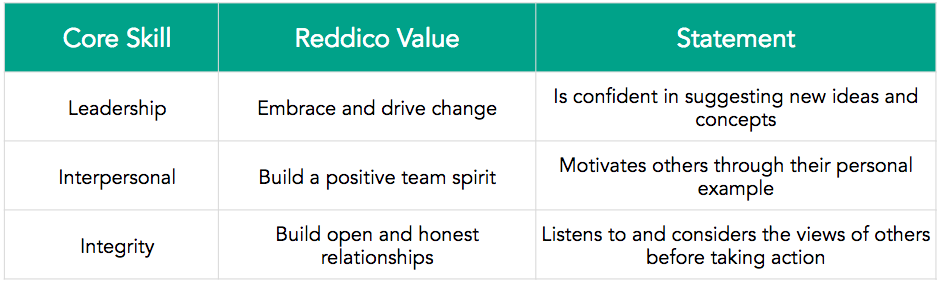

Reddico’s values and core job skills

I’d like to say there’s some method to the madness, but with the goal of continuing to reiterate the values and drive home their importance, it was clear they needed to play a significant role in our 360 tool. That was another reason why creating our own customisable system was the preferable option.

Let’s look at the values again:

Make a real and meaningful impact

Build a positive team spirit

Pursue growth and learning

Build open and honest relationships

Be passionate, proud and determined

Embrace and drive change

Each of these can be linked to a core skill you would need in the workplace, some more easily than others:

Embrace and drive change – Leadership

Build a positive team spirit – Interpersonal

Build open and honest relationships – Integrity

Be passionate, proud and determined – Passion / pride (obviously)

This left us with two:

Make a real and meaningful impact – which was eventually linked to innovation.

Pursue growth and learning – we struggled with this one. For v1 it was tied to commitment, but in hindsight that felt a little odd, so it was redefined as self-motivation for v2.

That gave us six core skills to evaluate in the first round of 360 reviews.

Putting questions against core skills

The next part simply involved research and brainstorming, to put questions against the six core areas that were to be assessed. There were plenty that missed the final cut, with five chosen for each section.

Each statement would be scored from 1 to 5, and the development team would then work out an average score for each section.
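As a minimal sketch of that averaging step, assuming each reviewer’s answers for a section arrive as a list of 1-5 scores (the function name and data shape are hypothetical, not taken from our tool), a section average could be worked out like this:

```python
from __future__ import annotations

SCORE_MIN, SCORE_MAX = 1, 5  # the 1-5 answer scale


def section_average(reviews: list[list[int]]) -> float:
    """Average every score in one section across all reviewers.

    Hypothetical helper: `reviews` holds one list of statement scores per
    reviewer (five statements per section in v1).
    """
    scores = [score for review in reviews for score in review]
    if not scores:
        raise ValueError("no scores for this section")
    if any(not SCORE_MIN <= score <= SCORE_MAX for score in scores):
        raise ValueError("scores must be between 1 and 5")
    # Plain mean of all answers, rounded for display
    return round(sum(scores) / len(scores), 2)
```

For example, three reviewers scoring the five Leadership statements as [4, 4, 5, 3, 4], [5, 5, 4, 4, 3] and [3, 4, 4, 5, 4] give 61 points over 15 answers, so `section_average` returns 4.07.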

Here are some of the questions from v1 of our 360 tool:

Open-ended questions

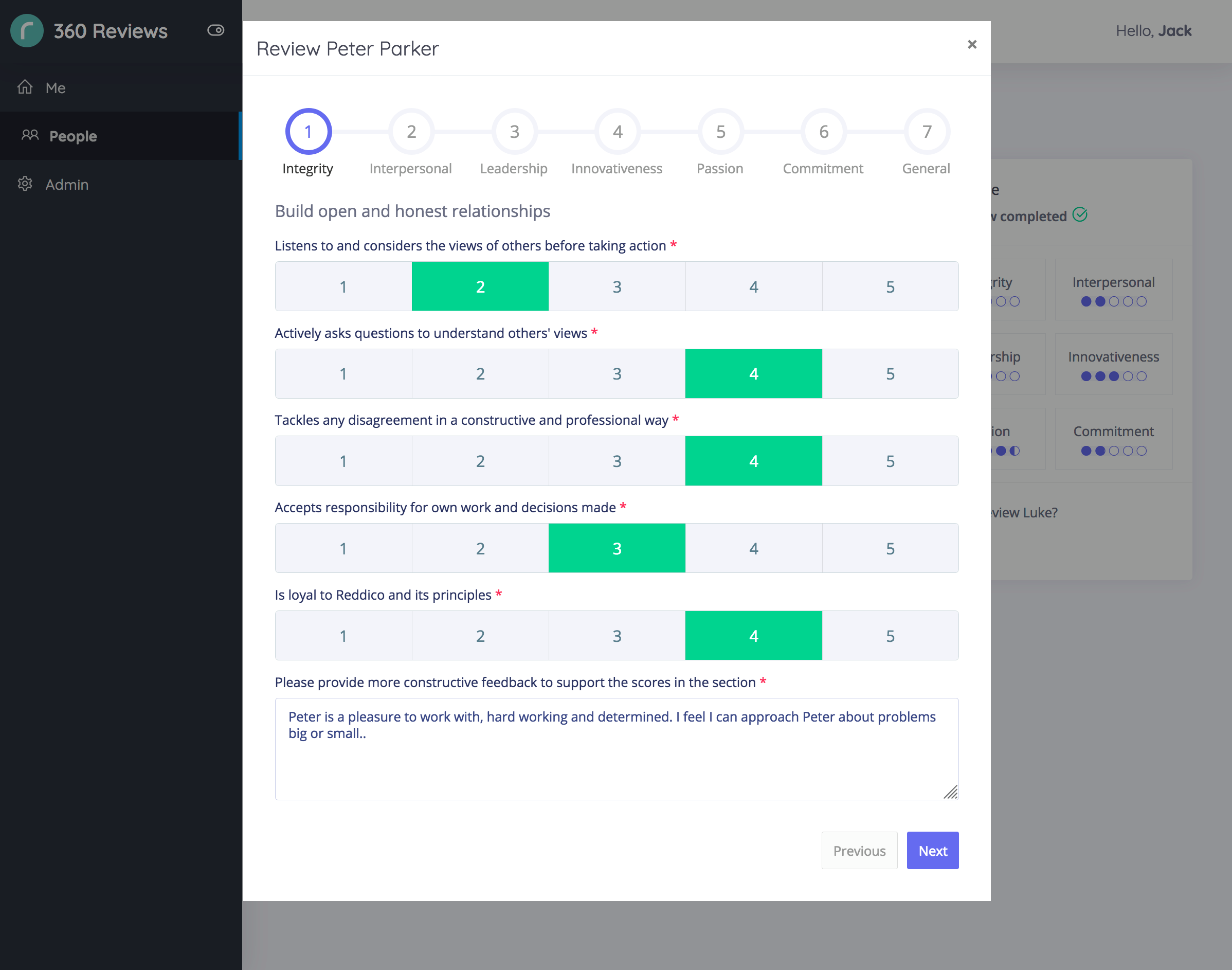

With six core areas for assessment and five statements against each, reviewers would be asked a total of 30 questions – which starts to look comprehensive. Just the type of rounded appraisal we envisioned from the outset.

However, scores alone wouldn’t provide much actionable feedback, only an understanding of which core areas you were strongest in.

That’s where the open-ended questions come into the picture.

For each skill / value, we wanted to include the following statement:

Please provide more constructive feedback to support the scores in this section

This would help to summarise each section and provide clarity as to why a certain score was given.

Still, there was more we could do to make this even more actionable and focussed on helping the team to analyse strengths and weaknesses. So, at the end of the survey we opted for these two questions:

What strengths do they show that you would like to see more often?

What would you like them to improve on, or start doing?

That last question could easily have been phrased differently: “What weaknesses should they look to improve or address?” But that subtle change turns a positive assessment into one with negative connotations, which is something we were very keen to avoid.

This approach would be much more likely to encourage positivity.

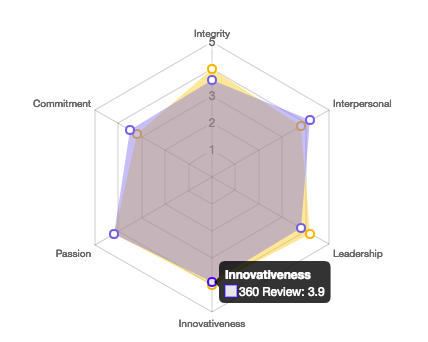

Our 360 review tool – v1

As promised, here’s a small insight into version one of our 360 review tool. There’ll be more next week as we share some of the tweaks and changes made following feedback from the team.

Graph comparing a self-review with the peer reviews. Hovering over each section shows the average score (out of 5) from the three team reviews.

The original form, with a section dedicated to each value / core skill. Reviewers were asked to choose from one to five, before providing further information.
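The comparison graph boils down to a simple calculation. Here’s a hypothetical Python sketch (not the tool’s actual code) that pairs each section’s self-review score with the average of the peer scores for that section; a positive gap means peers rated you higher than you rated yourself. The function name and input format are our assumptions.

```python
from __future__ import annotations


def compare_self_and_peers(
    self_scores: dict[str, float],
    peer_scores: dict[str, list[float]],
) -> dict[str, tuple[float, float, float]]:
    """Return {section: (self score, peer average, peer minus self)}.

    Hypothetical helper: assumes the self score and each peer's section
    average are already on the 1-5 scale.
    """
    comparison = {}
    for section, own in self_scores.items():
        peers = peer_scores.get(section, [])
        if not peers:
            continue  # no peer reviews for this section yet
        peer_avg = round(sum(peers) / len(peers), 2)
        comparison[section] = (own, peer_avg, round(peer_avg - own, 2))
    return comparison
```

For instance, a self score of 3.0 for Leadership against peer averages of 4.0, 3.5 and 4.5 gives a peer average of 4.0 and a gap of +1.0: the team sees more leadership in you than you do.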

What’s next?

Next week we wrap up our 360 reviews (for now). We’ll be looking at how the first set went, taking feedback from the team on improvements we could make, and rolling out the updated v2.

We’ll also be talking about our plans for 360s in the future, both internally and externally. Do we need to keep a rigid structure of carrying these out quarterly, or could it be more fluid? Can we commercialise the tool and offer something comprehensively better than the alternatives out there?
