Prioritising Google Search Console insights for larger brands

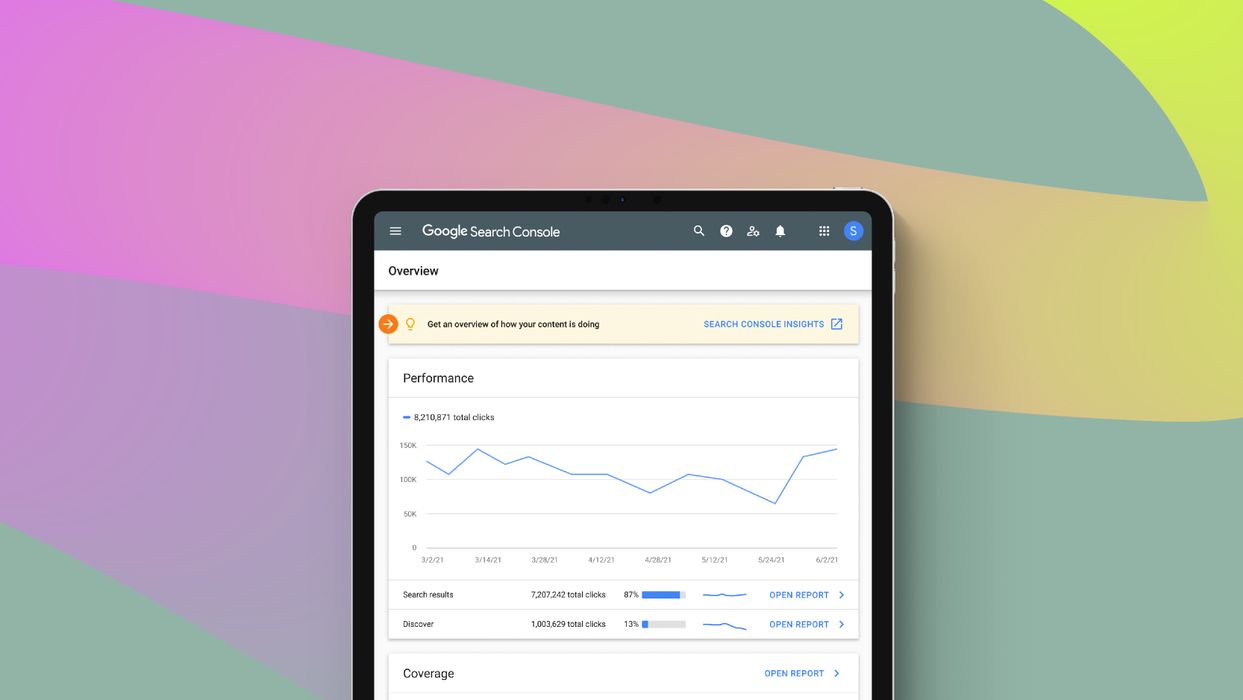

Google Search Console is sometimes neglected in favour of third-party SEO suites that provide us with more comprehensive and readily actionable data on the technical SEO makeup of our websites. This can often be the case for in-house or agency SEOs working on larger enterprise or ecommerce sites where a nuanced look at data is required.

On top of this, the migration to the “new” Google Search Console in 2019 saw many SEOs initially disappointed with what appeared to be a watered-down version of the old Search Console in terms of data availability and overall functionality, albeit with a more user-friendly interface. While the team at Google have consistently rolled out highly useful reporting features in tune with modern SEO approaches, the apparent lack of depth in the data and insights, along with their inconsistency, typically leaves many to rely on third-party tools for day-to-day site health monitoring.

But are we taking for granted what is, in reality, a free and legitimate tool fresh from the codebase of Google itself? When GSC is discussed in the SEO community, it’s often in the form of screenshots of graphs showing SEO success shared on social media taken straight from GSC’s “Performance” section. The indexation tool also makes a frequent appearance, albeit in the context of people showing confusion and at times frustration with it either being down for long periods of time or at odds with what our other tools are telling us.

Yet regardless of what type of site we’re working on, there are still some real data gems within Google Search Console worth mining. From the view of the large site owner who has data and insights coming out of their ears, we’re going to use this guide to take a look at how to get the best out of Google Search Console in terms of clear, actionable reporting and analysis.

Getting the best out of the Index Coverage Report: Valid & Excluded

To get an understanding of how Google is interpreting our website from an indexation point of view (with the aim of identifying errors), let’s firstly navigate to the “Coverage” tab. This is under the “Index” section of the left-hand menu of GSC.

For established websites, even with strong and steady SEO programmes behind them, you’ll often be surprised at what you can mine from this section and dig up in terms of indexation issues and errors across key pages you weren’t aware of. This becomes particularly important for large-scale websites (especially ecommerce sites) where indexation management becomes more prevalent and a cornerstone of your technical SEO.

In the Coverage report, we’re greeted with four tabs: Error, Valid with warnings, Valid and Excluded. Now there’s a lot to take in here with regard to identifying the overall indexation makeup of our site, so we’re going to focus on some particular areas of this report which are most useful in finding actionable insights. This would usually start with the “Error” tab, though in this instance we’re going to flip the report on its head and look at the “Valid” tab to identify issues.

The “Valid” tab and being hawkish over indexation

The below “Valid” report shows the number of indexed URLs on Google along with a trended impressions line graph against this:

As SEOs, part of our job is to be sceptical. You can see from the example above, there has been a drop in impressions (and therefore likely clicks) despite a largely consistent flat line in URLs being indexed. This would suggest a drop in rankings on our indexed URLs and likely overall quality issues of those URLs that are indeed indexed.

From this, we can head over to the “Performance” tab and quickly identify a list of URLs that have dropped in impressions and clicks over the same time period. It may also be worth reassessing our list of indexed URLs (given that little has changed in the above example) to see if there are any opportunities for crawl budget optimisation.

Another reason to keep an eye on the “Valid” tab, aside from the inevitable drop in indexed pages and impressions, would be to see if there is a sudden increase in indexed URLs. Unless you’ve overseen a large product launch and are aware of new sections of the website going live, a sudden increase of indexed pages is worth investigating.

Take an ecommerce brand for example. Perhaps some duplicate content pages or unwanted parameter URL pages have for some reason become indexed. Clicking through these on Search Console will show you a full list, though it’s worth doubling up with a crawling tool like Screaming Frog to get a bigger picture as to what is indexed and what (potentially) shouldn’t be.
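As a rough illustration of that check, the sketch below groups a list of indexed URLs by their parameter-free form, so you can see at a glance which pages have parameter variants sitting in the index. The URLs are made up, and it assumes you have already exported the list from Search Console or a crawler into a plain Python list.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_parameter_urls(urls):
    """Group URLs that carry a query string under their parameter-free form."""
    groups = defaultdict(list)
    for url in urls:
        parts = urlsplit(url)
        if parts.query:  # only URLs with ?key=value style parameters
            base = f"{parts.scheme}://{parts.netloc}{parts.path}"
            groups[base].append(url)
    return dict(groups)


# Hypothetical sample of indexed URLs (illustrative only).
indexed = [
    "https://www.example.com/shoes",
    "https://www.example.com/shoes?colour=red",
    "https://www.example.com/shoes?colour=red&sort=price",
    "https://www.example.com/bags",
]

flagged = group_parameter_urls(indexed)
for base, variants in flagged.items():
    print(f"{base} has {len(variants)} indexed parameter variant(s)")
```

A page with many indexed variants is a candidate for a closer look at canonical tags and parameter handling, rather than proof of a problem in itself.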

Mining for potential gems in the “Excluded” tab

Every site will have pages blocked from Google’s index, be it a duplicate content page or a gated part of the website only users can log in to. However, the “Excluded” tab is a great area to dig out quick indexation wins where you could be unintentionally missing out with URLs that potentially have value from both a search engine and user perspective.

Looking at the above example, let’s look into a couple of these “Excluded” areas that could reap some interesting rewards.

Page with redirect: URLs that are redirects typically won’t be indexed – or at least will eventually drop out of the index, though there is a high number of these on the above website example. It’s worth checking if these pages that redirect have a high number of internal or external links pointing to them using an external SEO tool. If that’s the case, then it’s worth repointing those links to their correct destinations. This could form part of a wider redirect chain audit across the site – redirect optimisation opportunities are prevalent within larger, older domains that have gone through various migrations.

Crawled - not currently indexed: Click through to this and you’ll typically be presented with a list of URLs you wouldn’t want indexed anyway, like author admin pages (if you’re using WordPress) and unwanted parameter URLs. However, it’s worth analysing this list in detail to identify if there are any URLs you may want indexed, such as possible category page URLs that need content added to them to improve their quality and ranking credentials. If that’s the case, check whether they are in the index by using a site search on Google. If they’re not, take the necessary steps to make the URL indexable: add it to your sitemap and check that it isn’t blocked by robots.txt, doesn’t carry a noindex tag and isn’t canonicalised to another page.

Submitted URL not selected as canonical: This is worth checking as it denotes URLs that Google has excluded from the index. This happens as it deems other URLs more worthy, likely due to similar and better content on other pages. It’s worth delving into this list to see if there are any URLs here that you may want indexed and that could rank in their own right following content optimisations.

Discovered - not currently indexed: These are worth checking on a regular basis, particularly after product launches or migrations on the site. This is where we can identify URLs that may be proving stubborn on the indexation front and are worth examining for potential issues in this regard.
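Returning to the “Page with redirect” item for a moment, the repointing exercise can be sketched in a few lines once you have a redirect map and a list of link targets from your crawler. Everything here is illustrative: the paths, the `redirects` dictionary and the helper names are assumptions, not the output format of any particular tool.

```python
def resolve_chain(start, redirects, max_hops=10):
    """Follow `start` through the redirect map and return every URL visited."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        nxt = redirects[chain[-1]]
        chain.append(nxt)
        if nxt in chain[:-1]:  # redirect loop: stop once a URL repeats
            break
    return chain


def repoint_suggestions(link_targets, redirects):
    """For each linked URL that redirects, suggest its final destination."""
    suggestions = {}
    for target in link_targets:
        if target in redirects:
            chain = resolve_chain(target, redirects)
            suggestions[target] = {"final": chain[-1], "hops": len(chain) - 1}
    return suggestions


# Hypothetical redirect map (source -> target) and internal link targets.
redirects = {"/old-a": "/old-b", "/old-b": "/new", "/sale-2019": "/sale"}
link_targets = ["/old-a", "/sale-2019", "/new"]

suggestions = repoint_suggestions(link_targets, redirects)
for source, info in suggestions.items():
    print(f"{source} -> {info['final']} ({info['hops']} hop(s))")
```

Links whose targets resolve in more than one hop are usually the first ones worth repointing, since they sit on a redirect chain.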

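Remember to make use of the “Inspect URL” tool within GSC to assess and request indexation. On the flip side, the “Removals” tool in the Index menu on the left can be used to remove URLs on a temporary basis. For permanent removals, manage URLs with the noindex tag accordingly, or remove the page or place it behind a login. Bear in mind that a robots.txt disallow only stops crawling: it won’t reliably remove a URL that is already indexed, and it prevents Google from seeing a noindex tag on that page.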
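For a URL you do want indexed, the usual blockers (robots.txt, a noindex tag, a canonical pointing elsewhere) can be checked with a short script. The sketch below is a simplified version that works on text you have already fetched; it uses Python’s standard-library robots.txt parser, which does not replicate every detail of how Googlebot interprets rules, and it ignores the X-Robots-Tag HTTP header. The function and class names are our own.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class HeadSignals(HTMLParser):
    """Collect the meta robots noindex flag and the canonical URL from HTML."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() in ("robots", "googlebot"):
            if "noindex" in attrs.get("content", "").lower():
                self.noindex = True
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonical = attrs.get("href") or None


def indexability_issues(url, html, robots_txt, user_agent="Googlebot"):
    """Return a list of reasons why `url` might not be indexable."""
    issues = []
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        issues.append("blocked by robots.txt")
    signals = HeadSignals()
    signals.feed(html)
    if signals.noindex:
        issues.append("noindex meta tag")
    if signals.canonical and signals.canonical.rstrip("/") != url.rstrip("/"):
        issues.append(f"canonicalised to {signals.canonical}")
    return issues


# Hypothetical inputs (illustrative only).
robots_txt = "User-agent: *\nDisallow: /checkout/\n"
html = (
    '<html><head><meta name="robots" content="noindex, follow">'
    '<link rel="canonical" href="https://www.example.com/category/footwear">'
    "</head><body></body></html>"
)
issues = indexability_issues("https://www.example.com/category/boots", html, robots_txt)
print(issues)
```

An empty list only means none of these three checks fired; the “Inspect URL” tool remains the authority on how Google actually sees the page.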

Ascertaining Quick Wins from the Core Web Vitals Report

While site speed optimisation has been a cornerstone of SEO for some time, the introduction of the Core Web Vitals report within Google Search Console suggests that Google is putting some weight behind the current June 2021 rollout of the page experience update in terms of the potential impact on SEO performance. While it’s hard to say how big an impact this will have on the SERP when the switch is flicked, the amount of discussion and tooling released surrounding Core Web Vitals suggests it requires due attention.

The Core Web Vitals report can be a pretty intimidating thing at first glance, particularly if you’re looking at a sea of red.

For larger websites, it can be hard to know where to start. Clicking through any given URL housed in the “Poor” list under one of the three Core Web Vitals metrics will provide you with a brief overview as to why it is being flagged, as well as the option to click through to PageSpeed in order to delve a bit further.

If you’re seeing a lot of URLs in the red, it can be a bit overwhelming to prioritise your Core Web Vitals optimisations. A useful approach is to combine an export of these “Poor” or “Need improvement” URLs with a larger crawl of your site using Screaming Frog and its PageSpeed Insights and Google Analytics API integrations.

While interesting insights can be garnered from using the PageSpeed Insights API in its own right, we want to marry this data up with traffic and goal data from our GA properties to prioritise accordingly. Before you start your crawl, head to Configuration > API Access to first integrate your GA property. To connect PageSpeed Insights, you’ll need to generate a free API key from Google.

Once both of these are set up, run your crawl as usual. You can start to apply filters and identify opportunities on slow performing pages that are business-critical in terms of traffic and conversions, potential or actual.
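If you prefer to do the final prioritisation outside the crawler, the join is simple enough to script. This is a minimal sketch that assumes two CSV exports: one listing the “Poor” URLs from Search Console and one from your crawl with traffic and speed data attached. The column names (`URL`, `Address`, `Sessions`, `LCP (s)`) are placeholders and will need matching to whatever your own exports contain.

```python
import csv
import io


def prioritise(poor_urls_csv, crawl_csv, top_n=10):
    """Return poor-performing URLs ordered by sessions, highest first."""
    poor = {row["URL"] for row in csv.DictReader(poor_urls_csv)}
    rows = []
    for row in csv.DictReader(crawl_csv):
        if row["Address"] in poor:
            rows.append((row["Address"], int(row["Sessions"]), float(row["LCP (s)"])))
    rows.sort(key=lambda r: r[1], reverse=True)
    return rows[:top_n]


# Hypothetical exports held in memory; in practice these would be open files.
poor_export = io.StringIO(
    "URL\n"
    "https://www.example.com/boots\n"
    "https://www.example.com/sale\n"
)
crawl_export = io.StringIO(
    "Address,Sessions,LCP (s)\n"
    "https://www.example.com/boots,1200,4.8\n"
    "https://www.example.com/sale,5400,5.1\n"
    "https://www.example.com/about,300,1.9\n"
)

priorities = prioritise(poor_export, crawl_export)
for address, sessions, lcp in priorities:
    print(f"{address}: {sessions} sessions, LCP {lcp}s")
```

Sorting by sessions is just one choice; swapping in goal completions or revenue would put the most commercially important slow pages at the top instead.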

Slicing and dicing the Performance Report for quick and useful insights

Whether you’re in-house or agency side, a typical challenge on larger, more enterprise-sized websites is the frequent need to communicate SEO success and opportunities in a top-level, digestible manner that makes sense to senior stakeholders. This is often on an ad hoc basis. So, with that in mind, the final part of this guide will address ways in which you can quickly slice and dice elements of the “Performance” section of GSC to communicate these.

Find areas where traffic has increased or decreased over time: head to the “Performance” tab and change the date filter to “Compare”, and select your desired dates of comparison. Ensuring you have selected “Total clicks” within the report, head down to any of the “Queries”, “Pages”, “Countries” or “Devices” tabs and toggle the arrow denoting “Clicks difference” to view a list of any of the above metrics where traffic has increased or decreased over your selected dates. This is primarily useful for identifying individual URLs (“Pages”) where traffic may have dropped or picked up for whatever reason.
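The same comparison can be reproduced on exported data when you need it in a report. The sketch below assumes you have clicks per page for two periods as simple dictionaries (the figures are invented) and lists the biggest losses first.

```python
def clicks_difference(current, previous):
    """Return (page, difference) pairs sorted from biggest loss to biggest gain."""
    pages = set(current) | set(previous)
    diffs = [(page, current.get(page, 0) - previous.get(page, 0)) for page in pages]
    # Sort by difference, then by page so the order is stable for ties.
    return sorted(diffs, key=lambda d: (d[1], d[0]))


# Hypothetical click totals per page for two comparison periods.
previous_period = {"/boots": 500, "/sale": 900, "/bags": 120}
current_period = {"/boots": 650, "/sale": 400, "/gift-cards": 80}

changes = clicks_difference(current_period, previous_period)
for page, diff in changes:
    print(f"{page}: {diff:+d} clicks")
```

Pages missing from one period are treated as having zero clicks there, which makes new and dropped pages show up alongside the ordinary gains and losses.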

Identifying queries that drive the highest CTR to our site: Reset the filters on the performance report and simply click on the “Average CTR” tab. Select your desired date range. Then head to the queries tab and toggle the arrow next to “CTR” on the far right to view which keywords drive the highest (or lowest) clickthrough rate. This is often an overlooked part of GSC and can help SEOs identify keywords with healthy search volumes that their sites rank well for, but are giving little joy in terms of return in traffic. If you’re exporting this data, a good tip is to exclude your branded queries from your data rows as these will typically drive high CTRs by their nature. The real opportunity here is to identify potential wins on unbranded queries.

The same can be done for identifying keywords that drive the highest or lowest amount of traffic if you toggle “Total clicks” in the report.

Additionally, discover which countries are driving the most traffic or CTR to your site by exploring the “Countries” tab.
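Once the queries are exported, stripping out brand terms and surfacing the low-CTR opportunities takes only a few lines. This is a sketch under simple assumptions: each row is a (query, clicks, impressions) tuple, “acme” stands in for your brand, and the impressions threshold is an arbitrary starting point.

```python
def low_ctr_opportunities(rows, brand_terms, min_impressions=1000):
    """Return non-brand queries with enough impressions, lowest CTR first."""
    results = []
    for query, clicks, impressions in rows:
        if impressions < min_impressions:
            continue  # too little data to judge CTR
        if any(term in query.lower() for term in brand_terms):
            continue  # branded queries naturally drive high CTRs
        results.append((query, round(clicks / impressions, 4)))
    return sorted(results, key=lambda r: r[1])


# Hypothetical export rows: (query, clicks, impressions).
rows = [
    ("acme boots", 900, 3000),
    ("waterproof hiking boots", 40, 8000),
    ("leather boot care", 150, 2500),
    ("boot laces", 5, 200),
]

opportunities = low_ctr_opportunities(rows, brand_terms=["acme"])
for query, ctr in opportunities:
    print(f"{query}: {ctr:.2%} CTR")
```

Queries at the top of this list are the ones where title and meta description work, or a ranking push, may have the most room to pay off.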

Identifying keyword ranking fluctuations: Many SEOs will rely on more comprehensive keyword ranking tools for this type of reporting, though GSC does bear fruit in this regard. Change the date filter to “Compare” and input your desired date comparison range. Head to the “Queries” tab, ensure “Average Position” is selected and click on the arrow next to “Position Difference” to identify your keyword gains and losses.

RegEx Filtering: A relatively new feature in Search Console, you can now filter data with RegEx (Regular Expressions). This is, frankly, a game-changer. Previously the filtering options in Search Console were quite limiting, allowing you to either include or exclude one phrase, or a URL parameter/sub-folder. Now, with the power of RegEx, you can filter data on a much more precise level. For instance, say you wanted to get a view of non-brand keyword traffic to your site. Previously, you could sort of do this but it would be imprecise and not account for misspellings of your brand terms. Now, you can filter out the core brand terms as well as all of the frequent misspellings of it. See below for an example of such filtering.
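To make the brand example concrete, here is a small sketch of the kind of pattern involved, tested locally before it goes anywhere near Search Console. The brand “acme” and its misspellings are invented, and the pattern is tried out with Python’s `re` module; Search Console’s filter uses RE2 syntax, which handles simple alternations and repeats like these in the same way, but anything more elaborate is worth checking against Google’s documentation.

```python
import re

# Hypothetical brand pattern: "acme" plus doubled-letter typos and "akme".
BRAND_PATTERN = re.compile(r"ac+m+e+|akme")


def split_brand(queries):
    """Split queries into (brand, non_brand) lists using the brand pattern."""
    brand, non_brand = [], []
    for query in queries:
        if BRAND_PATTERN.search(query.lower()):
            brand.append(query)
        else:
            non_brand.append(query)
    return brand, non_brand


# Hypothetical sample of search queries.
queries = [
    "acme boots",
    "accme discount code",
    "akme returns",
    "waterproof hiking boots",
]

brand, non_brand = split_brand(queries)
print("Brand:", brand)
print("Non-brand:", non_brand)
```

The same pattern string can then be used in the query filter’s regex option, set to exclude matches, to get a non-brand view of the Performance report.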

The possibilities afforded by RegEx filtering are yet another string to Search Console’s proverbial bow, making it an indispensable tool for SEOs and webmasters more generally.

Looking Ahead

There are plenty of other comprehensive technical SEO tools out there that can give us valuable insights, and as a result Search Console is often neglected as part of an SEO’s day to day auditing and monitoring. Every brand will of course have its own set of priorities and success metrics for SEO which certain tools may answer, though there are plenty of gems you can mine from within this free tool. Knowing where to look is key, and hopefully, some of these pointers will help you leverage the platform better on a regular basis.

What’s more, Search Console is constantly having new features added to it. For instance, at the time of writing a new addition to the platform is being rolled out, entitled ‘Search Console Insights’: https://search.google.com/search-console/insights/u/0/about. Google describes it as providing ‘content creators with the data that they need to make informed decisions and improve their content’. This in many ways sounds like a description of what Search Console does already, but some additional functionality highlighted by Google certainly sounds helpful in terms of the array of insights you can glean from the platform:

What are your best-performing pieces of content?

How are your new pieces of content performing?

How do people discover your content across the web?

What do people search for on Google before they visit your content?

Which article refers users to your website and content?

In a marketplace where SEO tools are often expensive and may sit outside of budgetary constraints, Search Console presents itself as a genuinely useful answer to such financial conundrums. From ranking data to keyword-level insights - using Search Console day-to-day will help you understand your organic traffic at a more nuanced and actionable level.
